In a world where information is faster to access than ever, the real edge lies not in speed, but in substance.

Large language models now make it easy to gather, summarize, and explore ideas at scale. But when the stakes are high – like launching a product, navigating regulatory shifts, or spotting trends before competitors – depth, nuance, and contextual judgment are what separate noise from insight.

Combine AI with lived experience, unpublished knowledge, and strategic judgment, and you get answers no single system – human or machine – could reach alone.

This is how expert-led research is evolving, and what it looks like in practice.

In the spotlight:

In a recent interview, I spoke to two industry pros who’ve each worked on hundreds of projects, helping the world’s top companies with their supplier challenges.

- Maikel Boot, Technical Director of Biotech, Pharma, & Healthcare Innovation at PreScouter

- Ryan LaRanger, Technical Director of Scientific Innovation, Markets & Regulatory Strategy at PreScouter

The Pain: Why “good enough” AI isn’t good enough

Today’s language models can give you a head start. They synthesize knowledge from across the web, draft documents, clean up messy CSVs, and even help write custom code.

But they can’t tell you whether what they’ve synthesized is complete, correct, or meaningful in context. We love AI chatbots, but they’ll happily parrot claims that no human expert would take seriously.

“One of our clients wanted to identify companies using AI for sensor-based failure prediction. The AI flagged a company with the most impressive claims. But after reviewing their papers, it turned out to be mostly hot air.”

– Ryan LaRanger

This is where most AI use cases still fall short: they surface shallow insights while missing the underlying truth. The deeper context is often buried in places no LLM can access – inside expert minds, proprietary platforms, and lived experience.

When there’s no public answer:

Often, clients ask questions for which no public answer exists. That’s not a failure of the internet – it’s a reflection of how much real knowledge lives outside it.

Physicians point out that AI can’t yet recognize body language, tone shifts, or hesitation – the very things that influence real diagnoses. Researchers share how negative results – what doesn’t work – are rarely published, yet critical to understanding a field.

“I once spent a year solving a problem in my model. Then I spoke to someone at a conference who said, ‘Oh yeah, that happens all the time. We just work around it.’ It was never published. But everyone in the field knew.”

– Ryan LaRanger

That tacit, unpublished, and sometimes intuitive knowledge is what keeps humans irreplaceable – for now.

From qualitative insight to hard analytics:

This kind of bespoke synthesis – combining unstructured data, private insights, and human expert heuristics – is becoming the norm, especially in sectors like healthcare, diagnostics, and advanced materials.

“We’ve had to create completely new analytical formulas because the data didn’t exist. We couldn’t find the exact values, but we could create a model close enough to guide decisions.”

– Maikel Boot

How does the team do this?

- Take unstructured interview transcripts, extract specific information (e.g. rough market sizes or prices) and turn them into quantified information.

- With enough interviews, you start to build a dataset of quantified, numerical information based on sampling experts in the field.

- Once multiple datasets have been created, it is possible to develop charts and analytics that answer questions for which no data previously existed.
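The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the team's actual pipeline: the snippets, expert labels, figures, and the `extract_millions` helper are all hypothetical, and real transcripts would need far more robust parsing plus human review of every extracted number.

```python
import re
from statistics import median

# Hypothetical transcript snippets: the experts and figures are invented for illustration.
snippets = [
    ("expert_a", "I'd say the market is roughly $40 million a year."),
    ("expert_b", "Somewhere around $55M annually, give or take."),
    ("expert_c", "Probably $35 million, maybe a bit more."),
]

# Matches figures such as "$40 million" or "$55M"; other phrasings would need more patterns.
AMOUNT = re.compile(r"\$(\d+(?:\.\d+)?)\s*(?:million|M)\b", re.IGNORECASE)


def extract_millions(text):
    """Return every dollar amount (in millions) mentioned in one snippet."""
    return [float(value) for value in AMOUNT.findall(text)]


# One row per quantified statement, so each number stays traceable to its source.
dataset = [
    {"expert": expert, "market_size_musd": value}
    for expert, text in snippets
    for value in extract_millions(text)
]

# Aggregate the sampled expert estimates into a range and a central value.
estimates = [row["market_size_musd"] for row in dataset]
summary = {
    "n": len(estimates),
    "low": min(estimates),
    "median": median(estimates),
    "high": max(estimates),
}
print(summary)
```

Keeping one row per statement, rather than one averaged number, means an analyst can always trace a chart value back to the interview it came from.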

As the team often explains to clients:

“You can download a market report or dashboard off the shelf—but odds are, the categories won’t match what you need. [So instead] we build them [analyses] around the client’s real decision-making categories.”

– Maikel Boot

A big part of this work is turning insight into action, often in the form of custom analytical dashboards.

But here’s the key: these dashboards aren’t built from templates. They’re co-developed with the client to answer precisely what they care about.

Even for something as technical as regulatory monitoring, it’s not just about translating and summarizing documents. It’s about helping clients understand why it matters and what to do about it.

A worked example:

(1) Take quotes from interviews and map them to specific value points:

- “At $400 the spec is fine, but at $600 I’d expect 80+ TPS throughput.” → Performance threshold

- “Once you cross $700, you’d better be well above the market leader.” → Price ceiling

- “If it scores ≥85 on the benchmark we’d pay a premium.” → High-value tier

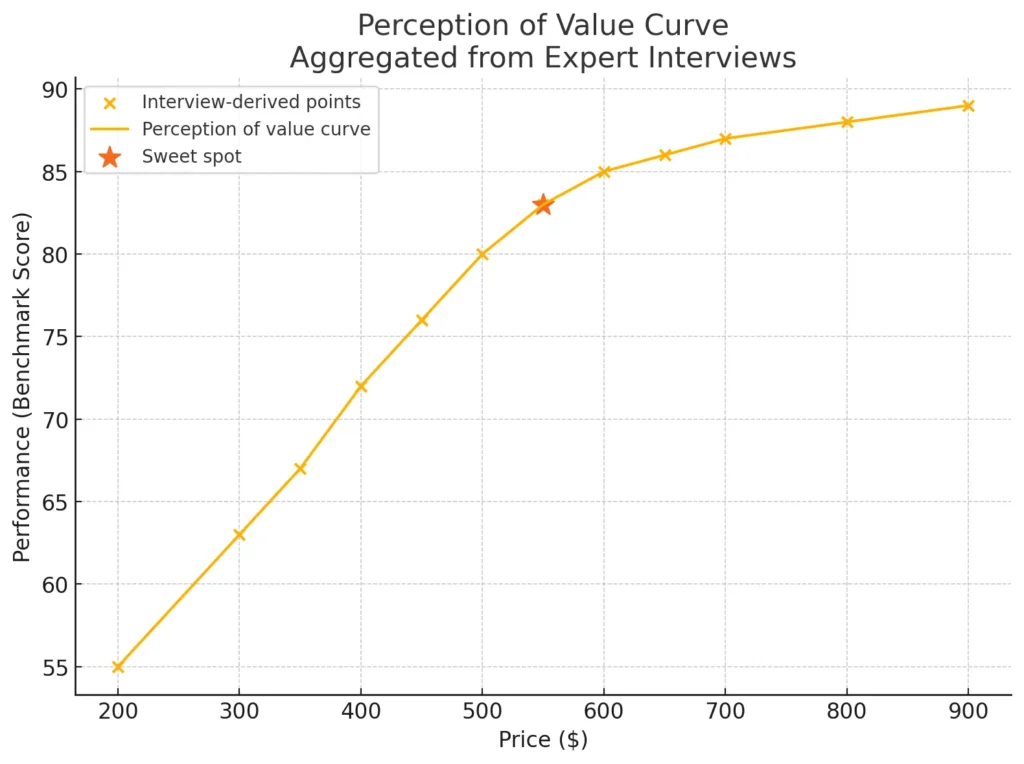

(2) Visualize the “perception of value curve”

The chart you see above transforms those themes into aggregated data:

- Interview-derived points (×) – one per expert or market segment

- Perception-of-value curve – the smoothed frontier that traces the best-accepted performance for every price

- Sweet spot (★) – where marginal willingness-to-pay begins to flatten; the candidate spec/price you pitch to management
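As a rough sketch of how such a curve could be derived, the Python below builds an upper envelope over interview points and picks a candidate sweet spot. Everything here is an assumption made for illustration: the data points are invented, the envelope is piecewise rather than smoothed, and "begins to flatten" is read as the frontier point with the largest drop in slope, which is only one of several reasonable definitions.

```python
# Hypothetical interview-derived points: (price in USD, benchmark score an expert
# considers acceptable at that price). All values are invented for illustration.
points = [
    (400, 70), (450, 74), (500, 79), (550, 78),
    (600, 83), (700, 86), (800, 88), (900, 90),
]


def frontier(pts):
    """Upper envelope: keep a point only if it beats the score of every cheaper point."""
    best = []
    for price, score in sorted(pts):
        if best and score <= best[-1][1]:
            continue  # a cheaper or equal-price point already reaches this score
        if best and price == best[-1][0]:
            best.pop()  # same price, higher score: keep only the higher one
        best.append((price, score))
    return best


def sweet_spot(curve):
    """Interior frontier point with the largest drop in slope (score gained per dollar).

    Needs at least three frontier points.
    """
    def slope(a, b):
        return (b[1] - a[1]) / (b[0] - a[0])

    drops = [
        (slope(curve[i - 1], curve[i]) - slope(curve[i], curve[i + 1]), curve[i])
        for i in range(1, len(curve) - 1)
    ]
    return max(drops)[1]


curve = frontier(points)
star = sweet_spot(curve)
print(curve)
print(star)
```

With real data, the envelope would typically be smoothed and the choice of sweet spot reviewed by the experts themselves rather than left to a single heuristic.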

(3) Answer executives’ questions

Use this curve to answer three executive questions at a glance:

- “Where is the entry-level vs. premium break?” – The slope changes around $500.

- “What spec lets us command $600 without overshooting?” – ~83 on the benchmark.

- “If we over-engineer to 90, what price ceiling appears?” – Nearly $900 before value flattens.
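Questions like these amount to reading values off the curve in either direction. A minimal sketch, assuming the curve is stored as sorted (price, score) pairs and that straight-line interpolation between neighboring points is acceptable; the `curve` values and the `interpolate` helper are hypothetical and only loosely echo the figures above.

```python
# Hypothetical frontier as (price in USD, benchmark score) pairs, sorted by price.
# Values are invented for illustration.
curve = [(400, 70), (500, 79), (600, 83), (700, 86), (800, 88), (900, 90)]


def interpolate(pairs, x):
    """Piecewise-linear lookup of y at x over (x, y) pairs sorted by strictly increasing x."""
    if not pairs[0][0] <= x <= pairs[-1][0]:
        raise ValueError("x is outside the range covered by the interviews")
    for (x0, y0), (x1, y1) in zip(pairs, pairs[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Forward lookup: which benchmark score does a given price call for?
score_at_650 = interpolate(curve, 650)

# Inverse lookup: swap the axes to ask which price a given score supports.
# This works only because scores on the frontier strictly increase with price.
inverse = [(score, price) for price, score in curve]
price_at_85 = interpolate(inverse, 85)

print(score_at_650, round(price_at_85, 2))
```

The lookup deliberately refuses to extrapolate: a price or score outside what the experts actually discussed has no interview evidence behind it.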

Where this is going:

Some speculate that specialized AI agents are on the horizon – models fine-tuned to specific domains, with deeper reasoning built in. But we’re not there yet. Until then, the winning strategy is simple:

- Let AI boil the ocean of data.

- Source undocumented knowledge from experts.

- Build custom dashboards that connect the two.

- Let experts cut through the noise.

Because in the end, accurate insights don’t come from publicly available data alone. They also depend on how experts frame, challenge, and refine them.